ALLISTAIR COTA

DESIGN PORTFOLIO

TurtleBot Contests

Every year, the Mechatronics Systems: Design & Integration course challenges teams to program a robot to complete a set of tasks across several contests. In my fourth year of Mechanical Engineering, teams programmed the TurtleBot 2 for three contests. The TurtleBot is a low-cost personal robot system that runs on the open-source Robot Operating System (ROS). Its main hardware consists of the iClebo Kobuki base, a differential-drive mobile base, and a Microsoft Kinect camera. Linux (Ubuntu) laptops were used to write the contest programs and deploy them onto the TurtleBot, and the code was written primarily in C++.

Autonomous Robot Search of an Environment

The first contest involved programming the TurtleBot to drive autonomously around an unknown environment while the ROS gmapping library built a map of it in real time from the Kinect sensor data and wheel odometry. The gmapping library uses simultaneous localization and mapping (SLAM) to produce the map. The team had to devise an exploration algorithm that would let the robot cover as much of the environment as possible. Although the map captured every corridor and entrance in the maze, a weakness in the team's algorithm prevented it from fully capturing unusually shaped objects, such as the round trash cans placed in the maze. Another lesson learned was that the maze did not need to be traversed so many times: with each additional pass, error from the wheel odometry accumulated, and significant drift eventually appeared in the map.
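Wheel-odometry drift can be illustrated with a short dead-reckoning sketch. The C++ below is a minimal sketch and not the team's code. It assumes a planar differential-drive model with midpoint integration. The names `Pose`, `integrateOdometry` and `straightLineDrift` are hypothetical. So is the `bias` parameter, which models the true right wheel travelling slightly farther than its encoder reports, as a stand-in for wheel slip or a mis-calibrated wheel radius.

```cpp
#include <cassert>
#include <cmath>

// Planar pose of a differential-drive robot.
struct Pose {
    double x = 0.0;      // m
    double y = 0.0;      // m
    double theta = 0.0;  // rad
};

// Dead-reckoning update from the distance each wheel travelled since the
// last update, using the heading at the midpoint of the step.
Pose integrateOdometry(Pose p, double dLeft, double dRight, double wheelBase) {
    assert(wheelBase > 0.0);
    const double dCenter = 0.5 * (dLeft + dRight);
    const double dTheta = (dRight - dLeft) / wheelBase;
    const double midHeading = p.theta + 0.5 * dTheta;
    p.x += dCenter * std::cos(midHeading);
    p.y += dCenter * std::sin(midHeading);
    p.theta += dTheta;
    return p;
}

// Hypothetical drift experiment: the encoders report equal wheel travel, so the
// odometry estimate follows a straight line, but the true right wheel travels
// (1 + bias) times as far. Returns the position error (m) after `distance` metres.
double straightLineDrift(double distance, double step, double bias, double wheelBase) {
    assert(step > 0.0);
    Pose estimated;
    Pose truth;
    const long steps = std::lround(distance / step);
    for (long i = 0; i < steps; ++i) {
        estimated = integrateOdometry(estimated, step, step, wheelBase);
        truth = integrateOdometry(truth, step, step * (1.0 + bias), wheelBase);
    }
    return std::hypot(truth.x - estimated.x, truth.y - estimated.y);
}
```

Take a hypothetical 0.23 m wheel base and a 0.2 % bias. Calling `straightLineDrift(40.0, 0.01, 0.002, 0.23)` gives a much larger error than the same call over 10 m. The small heading error grows with distance, and the position error compounds it. This is consistent with the observation that extra passes through the maze mainly added drift.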

Team

- Samson Chiu
- Allistair Cota
- Jamal Stetieh
- Kaicheng Zhang

Finding Objects of Interest in an Environment

The goal of Contest 2 was for the TurtleBot to navigate an environment and find and identify the image tags on five boxes placed at different locations. The robot was first localized using the "2D Pose Estimate" feature in RViz. The user enters the robot's starting pose on a known map, and Monte Carlo Localization then refines the pose estimate while the user manually pushes the robot along the boundaries of the environment. The teaching assistant provided the box coordinates in a text file on the day of the contest. The team therefore made sure the code was robust enough to navigate to any arbitrary set of coordinates.
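The idea behind Monte Carlo Localization can be shown in one dimension. ROS ships its own MCL implementation in the amcl package. The sketch below is neither that package nor the team's code. It is a minimal particle filter under hypothetical assumptions: a straight corridor whose only landmark is an end wall at `wallX`, a range sensor with Gaussian noise, and illustrative names (`Corridor1D`, `ParticleFilter1D`).

The filter works in three steps:
- It seeds particles around a user-supplied guess, much as the "2D Pose Estimate" click seeds the filter.
- It moves the particles by the odometry reading.
- It weights each particle against the range reading, then resamples.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <utility>
#include <vector>

// Hypothetical 1-D map: a corridor whose only landmark is an end wall at x = wallX.
struct Corridor1D {
    double wallX;
};

class ParticleFilter1D {
public:
    // Seed particles around an initial guess (spread and count must be > 0).
    ParticleFilter1D(double guess, double spread, std::size_t count, unsigned seed)
        : rng_(seed), particles_(count), weights_(count, 1.0 / static_cast<double>(count)) {
        assert(count > 0 && spread > 0.0);
        std::normal_distribution<double> init(guess, spread);
        for (double& p : particles_) p = init(rng_);
    }

    // Motion update: shift each particle by the odometry reading plus Gaussian
    // noise (motionNoise must be > 0).
    void predict(double moved, double motionNoise) {
        std::normal_distribution<double> noise(0.0, motionNoise);
        for (double& p : particles_) p += moved + noise(rng_);
    }

    // Measurement update: weight each particle by how well its expected range to
    // the wall matches the measured range (Gaussian sensor model), then resample.
    void correct(const Corridor1D& map, double measuredRange, double rangeNoise) {
        double total = 0.0;
        for (std::size_t i = 0; i < particles_.size(); ++i) {
            const double err = (map.wallX - particles_[i]) - measuredRange;
            weights_[i] = std::exp(-0.5 * err * err / (rangeNoise * rangeNoise));
            total += weights_[i];
        }
        if (total <= 0.0) return;  // every particle implausible: keep the current set
        for (double& w : weights_) w /= total;
        resampleLowVariance();
    }

    // Pose estimate: mean of the equally weighted particles.
    double estimate() const {
        double sum = 0.0;
        for (double p : particles_) sum += p;
        return sum / static_cast<double>(particles_.size());
    }

private:
    // Low-variance (systematic) resampling: one random offset, then N evenly
    // spaced pointers into the cumulative weight distribution.
    void resampleLowVariance() {
        const std::size_t n = particles_.size();
        std::uniform_real_distribution<double> offset(0.0, 1.0 / static_cast<double>(n));
        const double r = offset(rng_);
        std::vector<double> next;
        next.reserve(n);
        double cumulative = weights_[0];
        std::size_t i = 0;
        for (std::size_t m = 0; m < n; ++m) {
            const double u = r + static_cast<double>(m) / static_cast<double>(n);
            while (u > cumulative && i + 1 < n) cumulative += weights_[++i];
            next.push_back(particles_[i]);
        }
        particles_ = std::move(next);
        weights_.assign(n, 1.0 / static_cast<double>(n));
    }

    std::mt19937 rng_;
    std::vector<double> particles_;
    std::vector<double> weights_;
};
```

Each predict/correct cycle lets the particle cloud collapse further onto the positions that best explain the sensor data. Pushing the robot around the environment plays this role: it supplies fresh motion and measurements until the estimate settles.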

To minimize the time taken to complete the contest, the code used the nearest neighbor algorithm to decide the order in which the TurtleBot visited the boxes. For object identification, the appropriate frame was captured from the Kinect video feed, and the tags were identified with the OpenCV SURF feature detection library. Each tag's identity was printed to the laptop terminal. The contest was a success: the TurtleBot correctly identified all the image tags and tied for the fastest time in the class (1 min 20 s), well under the 5-minute limit.
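The greedy nearest neighbor ordering fits in a few lines of C++. This is an illustrative sketch, not the team's code. It assumes straight-line (Euclidean) distance as the travel cost, although the planned path around obstacles can be longer. The names `Point2D`, `nearestNeighborOrder` and `tourLength` are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

struct Point2D {
    double x;  // m, map frame
    double y;  // m, map frame
};

// Greedy nearest neighbor ordering: starting from `start`, repeatedly pick the
// closest goal that has not been visited yet. Returns indices into `goals`.
std::vector<std::size_t> nearestNeighborOrder(Point2D start, const std::vector<Point2D>& goals) {
    std::vector<bool> visited(goals.size(), false);
    std::vector<std::size_t> order;
    order.reserve(goals.size());
    Point2D current = start;
    for (std::size_t step = 0; step < goals.size(); ++step) {
        std::size_t best = 0;
        double bestDist = std::numeric_limits<double>::infinity();
        for (std::size_t i = 0; i < goals.size(); ++i) {
            if (visited[i]) continue;
            const double d = std::hypot(goals[i].x - current.x, goals[i].y - current.y);
            if (d < bestDist) {
                bestDist = d;
                best = i;
            }
        }
        visited[best] = true;
        order.push_back(best);
        current = goals[best];
    }
    return order;
}

// Straight-line length of the route start -> goals[order[0]] -> goals[order[1]] -> ...
double tourLength(Point2D start, const std::vector<Point2D>& goals,
                  const std::vector<std::size_t>& order) {
    double length = 0.0;
    Point2D current = start;
    for (std::size_t idx : order) {
        assert(idx < goals.size());
        length += std::hypot(goals[idx].x - current.x, goals[idx].y - current.y);
        current = goals[idx];
    }
    return length;
}
```

The greedy rule does not guarantee the shortest route. With only five boxes, checking all 120 orderings would also be cheap. The nearest neighbor choice is simple, however, and usually gives a reasonable route.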

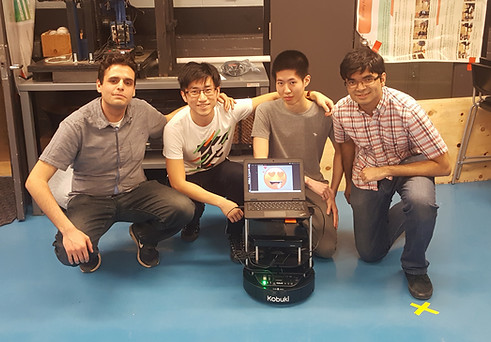

"Follow Me" Robot Companion

Contest 3 involved developing an interactive TurtleBot. The TurtleBot had to follow a team member as they moved within the environment. It also had to interact with the user through emotions: two primary/reactive emotions and two secondary/deliberative emotions, each shown in response to its own distinct stimulus. For full marks, a panel of judges (comprising the course instructor and teaching assistants) had to be able to identify each emotion during the contest. Teams were allowed to use a variety of input and output modes, as long as they used only the standard hardware (no external hardware was allowed).

The team used the Kinect depth data to determine the distance between the robot and objects. The Kinect video feed and the OpenCV SURF feature detection library were used for image identification, and the Kobuki base bumpers detected physical stimuli. For outputs, velocity commands to the Kobuki base, audio, and emoticon images were used to express each emotion. The team drew inspiration from R2-D2 from Star Wars and the real-world commercial pet robot AIBO. It gave the TurtleBot its own unique "voice" by creating a tone with the Caustic synthesizer. Each emotion had its own note pattern, played with the same synthesizer settings. The emoticon facial expressions were also modified subtly to convey each change in emotion. The team received full marks for the contest implementation, but acknowledges that more complex architectures could have been designed given more time.
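The portfolio does not describe the following controller itself. The sketch below shows one common way such behavior could be built, not the team's implementation. It is a proportional controller: it turns the person's range (from the Kinect depth data) and bearing into forward and turning speeds, limited to safe values. Several values are hypothetical and chosen for illustration: the gains, the 0.8 m following gap, the speed limits, the 525-pixel focal length used in the test, and the function names. The sign conventions follow ROS, where a positive angular velocity turns the robot counter-clockwise (to the left).

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Forward and turning speed, in the spirit of a ROS Twist (linear.x, angular.z).
struct VelocityCommand {
    double linear;   // m/s, positive = forward
    double angular;  // rad/s, positive = counter-clockwise (turn left)
};

// Hypothetical tuning values, for illustration only.
struct FollowGains {
    double desiredDistance = 0.8;  // m, following gap to keep
    double kLinear = 0.6;          // (m/s) per m of range error
    double kAngular = 1.5;         // (rad/s) per rad of bearing error
    double maxLinear = 0.3;        // m/s
    double maxAngular = 1.0;       // rad/s
};

// Bearing (rad) to image column u for a pinhole camera with principal point cx
// and focal length fx (pixels); positive when the target is left of center.
double bearingFromPixel(double u, double cx, double fx) {
    assert(fx > 0.0);
    return std::atan2(cx - u, fx);
}

// Clamp a command to [-limit, limit].
double saturate(double value, double limit) {
    return std::max(-limit, std::min(value, limit));
}

// Proportional follow controller: close the range error and turn toward the person.
VelocityCommand followCommand(double distance, double bearing, const FollowGains& g) {
    return {saturate(g.kLinear * (distance - g.desiredDistance), g.maxLinear),
            saturate(g.kAngular * bearing, g.maxAngular)};
}
```

Because the linear term changes sign, this controller backs away if the person comes closer than the following gap. The person's range might be taken as, for example, the median depth over the pixels belonging to them. That is less sensitive to noisy or missing depth readings than a single pixel, though this choice is also an assumption.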

The team and the TurtleBot (from left to right): Jamal, Kaicheng, Samson, and myself

The final practice run for Contest 2 in the practice environment. Note that box pose coordinates were modified on the day of the contest.

Contest 3: Emotion demonstration